Hadoop fault tolerance in distributed computing

In distributed computing environments, fault tolerance is crucial for maintaining data integrity and reliability, especially when processing large volumes of data. Hadoop is built on the assumption that hardware fails, and it combines several mechanisms to limit the impact of disk and node failures, network problems, and other disruptions. We’ll look at how Hadoop achieves fault tolerance in distributed computing and how it safeguards data integrity in big data processing tasks.

Key Takeaways

- HDFS Replication: Stores multiple copies of every data block (three by default) to minimize data loss.

- Block Replication Strategy: Rack-aware replica placement improves fault tolerance and data availability.

- Redundant Task Execution: Failed task attempts are retried on other nodes, and slow tasks can be backed up by speculative attempts.

- Automatic Job Recovery: YARN restarts failed ApplicationMasters, which reschedule failed tasks so jobs complete despite node failures.

- Failure Detection and Data Integrity: Heartbeats reveal failed nodes, and checksums detect corrupted data.

Replication in Hadoop Distributed File System (HDFS)

HDFS (Hadoop Distributed File System) is Hadoop’s primary storage system and a central part of Hadoop fault tolerance in distributed computing. It is designed to store very large datasets on clusters of commodity machines, where disk and node failures are routine rather than exceptional. Its main defense against such failures is data replication: each data block is copied to several nodes within the Hadoop cluster.

Keeping multiple copies of each data block greatly reduces the risk of data loss when nodes fail, because a block stays readable as long as at least one DataNode holding it is alive. The default replication factor is three (set by the dfs.replication property), so each block is stored on three different DataNodes. The factor is configurable both cluster-wide and per file, and can be raised or lowered according to how critical the data is.

When a DataNode fails, the NameNode notices that its heartbeats have stopped and marks it as dead. It then schedules new copies of every block that node held, using the surviving replicas as sources and healthy nodes as targets. This restores the target replication factor without manual intervention, and this self-healing behavior is one reason HDFS is widely adopted in big data environments.
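The re-replication step can be sketched in a few lines of Python. This is a simplified model, not HDFS code. The names block_map and re_replicate and the node names are hypothetical. The real NameNode also respects rack placement and throttles how many copies run at once.

```python
def re_replicate(block_map, failed_node, live_nodes, replication=3):
    """Simplified NameNode-style repair after a DataNode failure (not HDFS code).

    block_map maps a block ID to the set of nodes holding a replica. The failed
    node is removed from every set, and each under-replicated block is copied
    from a surviving replica to live nodes that do not already hold it.
    Returns the list of (block, source, target) copies that would be scheduled.
    """
    copies = []
    for block, holders in block_map.items():
        holders.discard(failed_node)
        if not holders:
            continue  # all replicas lost: there is no source left to copy from
        candidates = sorted(n for n in live_nodes if n not in holders)
        while len(holders) < replication and candidates:
            target = candidates.pop(0)
            source = min(holders)  # any surviving replica can serve as the source
            holders.add(target)
            copies.append((block, source, target))
    return copies


block_map = {
    "blk_1": {"dn1", "dn2", "dn3"},
    "blk_2": {"dn2", "dn3", "dn4"},
}
copies = re_replicate(block_map, failed_node="dn3", live_nodes=["dn1", "dn2", "dn4", "dn5"])
print(copies)  # [('blk_1', 'dn1', 'dn4'), ('blk_2', 'dn2', 'dn1')]
```

After the repair, both blocks are back at three replicas, and neither one lists dn3.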

Replica placement is also deliberate. When rack awareness is configured, the default policy works as follows. The first replica goes on the writer’s node if the writer runs on a DataNode; otherwise the NameNode chooses a node. The second replica goes on a node in a different rack, and the third on a different node in the same rack as the second. This limits the traffic that crosses racks during writes while guaranteeing that losing an entire rack still leaves at least one replica available. Spreading blocks across the cluster this way also avoids concentrating load on a few machines.
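The default placement for a replication factor of three can be sketched as follows: one replica on the writer’s node, one on a node in another rack, and one on a different node in that same remote rack. The sketch makes three assumptions. The writer runs on a DataNode. The topology map (a hypothetical rack-to-nodes dictionary) has at least two racks. The remote rack holds at least two nodes. Real HDFS picks nodes randomly and skips overloaded or nearly full DataNodes. This version picks deterministically so the output is predictable.

```python
def place_replicas(writer, topology):
    """Return three target nodes following the default HDFS placement idea.

    topology maps a rack name to the list of DataNodes in that rack.
    Assumes the writer is itself a DataNode and another rack has >= 2 nodes.
    """
    rack_of = {node: rack for rack, nodes in topology.items() for node in nodes}
    first = writer  # replica 1: the writer's own node
    remote_rack = next(r for r in sorted(topology) if r != rack_of[writer])
    second = topology[remote_rack][0]  # replica 2: a node on a different rack
    # replica 3: a different node on the same remote rack as replica 2
    third = next(n for n in topology[remote_rack] if n != second)
    return [first, second, third]


topology = {"/rack1": ["dn1", "dn2"], "/rack2": ["dn3", "dn4"]}
print(place_replicas("dn1", topology))  # ['dn1', 'dn3', 'dn4']
```

Only one cross-rack transfer is needed per block (from dn1 to dn3). Even so, losing either rack entirely still leaves at least one copy.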

In summary, replication is the cornerstone of fault tolerance in HDFS. It keeps data reliable and available through node and rack failures, which makes HDFS a dependable storage layer for organizations that rely on Hadoop for big data processing.

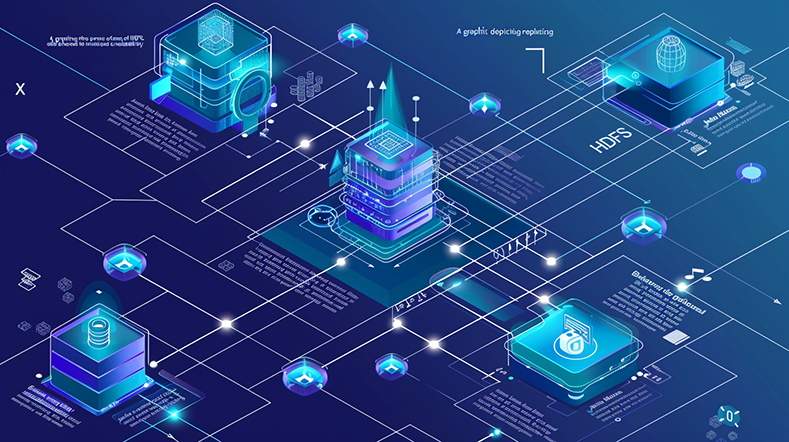

Data Replication Strategies

HDFS replicates data at the level of blocks rather than whole files, a strategy known as block replication. How blocks are created, copied, and monitored determines how well the file system keeps data available when nodes fail.

When a client writes a file to HDFS, the file is divided into fixed-size blocks (128 MB by default, set by dfs.blocksize), and each block is replicated to several DataNodes. The copies are written through a pipeline: the client streams the block to the first DataNode, which forwards it to the second, which forwards it to the third. Acknowledgments then travel back along the same chain. Because the copies sit on different nodes, HDFS can lose nodes without losing access to the data.
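The split into blocks is simple arithmetic. The sketch assumes the default block size of 128 MB (dfs.blocksize in Hadoop 2 and later). The helper split_into_blocks is hypothetical, not an HDFS API.

```python
MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # default dfs.blocksize in Hadoop 2 and later


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Sizes of the blocks a file of file_size bytes occupies.
    The last block holds only the leftover bytes; it is not padded to full size."""
    sizes = []
    remaining = file_size
    while remaining > 0:
        sizes.append(min(block_size, remaining))
        remaining -= sizes[-1]
    return sizes


print([size // MB for size in split_into_blocks(300 * MB)])  # [128, 128, 44]
```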

If a DataNode holding a replica fails, a client reading the block switches to another DataNode that holds a copy, so reads continue without data loss. Meanwhile, the NameNode tracks where every replica lives through the heartbeats and block reports that DataNodes send it. When a block falls below its target replication factor, the NameNode schedules new copies until the target is met again.
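A reader’s failover can be modeled as trying replica locations in order until one responds. Both read_block and fetch are hypothetical stand-ins. The real HDFS client does this internally when a DataNode connection fails, and it also remembers failed nodes for the rest of the read.

```python
def read_block(block_id, replicas, fetch):
    """Return the block from the first replica location that answers.

    replicas lists the DataNodes holding block_id; fetch(node, block_id) is a
    hypothetical read call that raises OSError when the node is unreachable.
    """
    errors = []
    for node in replicas:
        try:
            return fetch(node, block_id)
        except OSError as exc:
            errors.append(f"{node}: {exc}")  # remember why, then try the next copy
    raise OSError(f"no readable replica of {block_id}: {errors}")


# Toy cluster: dn1 has failed, dn2 and dn3 still hold the block.
stores = {"dn2": {"blk_7": b"payload"}, "dn3": {"blk_7": b"payload"}}


def fetch(node, block_id):
    if node not in stores:
        raise OSError("connection refused")
    return stores[node][block_id]


print(read_block("blk_7", ["dn1", "dn2", "dn3"], fetch))  # b'payload'
```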

The replication factor trades durability against storage cost. With the default of three, every terabyte of data consumes three terabytes of raw disk, and each block stays available until all three DataNodes holding it are lost. Critical datasets can use a higher factor and easily regenerated data a lower one. The factor of existing files can be changed with the hdfs dfs -setrep command.
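The trade-off can be made concrete with a little arithmetic. The helper replication_cost is illustrative only. It assumes each replica sits on a distinct DataNode and ignores metadata and non-HDFS disk usage.

```python
def replication_cost(logical_tb, replication=3):
    """Raw capacity consumed and per-block failure tolerance for a replication factor.

    Assumes every replica is on a distinct DataNode, so a block stays readable
    until all `replication` nodes holding it are lost.
    """
    raw_tb = logical_tb * replication
    tolerated_node_losses = replication - 1
    return raw_tb, tolerated_node_losses


for r in (2, 3, 5):
    raw, tolerated = replication_cost(10, r)
    print(f"r={r}: 10 TB of data uses {raw} TB of disk; "
          f"each block survives losing {tolerated} of its nodes")
```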

Replication also helps performance. Because each block has several copies, the NameNode can direct each reader to the closest replica: first one on the same node, then one in the same rack, then one elsewhere. Read traffic for frequently accessed data is therefore spread across several machines instead of concentrating on one.
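The closest-replica rule can be sketched with Hadoop’s notion of network distance, which counts hops in the topology tree. Inside one data center, the distance is 0 for the same node, 2 for another node in the same rack, and 4 for a node in a different rack. The helpers and node names are hypothetical. The real NameNode also randomizes the order of equally distant replicas, which spreads read load further.

```python
def network_distance(a, b, rack_of):
    """Hadoop-style distance inside one data center:
    0 = same node, 2 = same rack, 4 = different racks."""
    if a == b:
        return 0
    return 2 if rack_of[a] == rack_of[b] else 4


def order_replicas(reader, replicas, rack_of):
    """Sort replica locations closest-first; ties keep their input order
    (Python's sort is stable), unlike HDFS, which shuffles ties."""
    return sorted(replicas, key=lambda node: network_distance(reader, node, rack_of))


rack_of = {"dn1": "/r1", "dn2": "/r1", "dn3": "/r2", "dn4": "/r2"}
print(order_replicas("dn2", ["dn3", "dn1", "dn4"], rack_of))  # ['dn1', 'dn3', 'dn4']
```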

Block replication therefore underpins both availability and performance in HDFS. Data remains readable through node failures, lost replicas are restored automatically, and the cluster provides a robust and resilient foundation for big data processing.

Redundant Task Execution

Replication protects stored data, and redundant task execution protects computation. Hadoop’s MapReduce framework uses it to make sure that data processing tasks complete reliably, even when nodes fail or misbehave.

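In the MapReduce framework, a job is split into many independent map and reduce tasks, and each task runs as one or more attempts. Tasks are not normally executed on several nodes at once. Instead, if an attempt fails, for example because its node crashes or the task process exits with an error, the framework schedules a new attempt on another node. Each task gets up to four attempts by default (mapreduce.map.maxattempts and mapreduce.reduce.maxattempts); if all of them fail, the job fails. Because tasks read their input from HDFS and do not share state with each other, a failed attempt can simply be rerun from scratch.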
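The retry loop can be sketched as follows. MAX_ATTEMPTS mirrors the default of four attempts per task. The function run_with_retries and its run callback are hypothetical. The real scheduler picks nodes by availability and can stop using nodes where a job’s tasks keep failing. This sketch simply rotates through a list.

```python
MAX_ATTEMPTS = 4  # default of mapreduce.map.maxattempts and mapreduce.reduce.maxattempts


def run_with_retries(task, nodes, run, max_attempts=MAX_ATTEMPTS):
    """Run a task, starting each new attempt on the next node after a failure.

    run(task, node) is a hypothetical callback returning True if the attempt
    succeeds. Returns (node that succeeded, nodes tried in order).
    """
    tried = []
    for attempt in range(max_attempts):
        node = nodes[attempt % len(nodes)]
        tried.append(node)
        if run(task, node):
            return node, tried
    # All attempts failed: in MapReduce this fails the task and, by default, the job.
    raise RuntimeError(f"{task} failed after {max_attempts} attempts on {tried}")


unhealthy = {"node-a", "node-b"}
node, tried = run_with_retries("map_0003", ["node-a", "node-b", "node-c"],
                               run=lambda task, n: n not in unhealthy)
print(node, tried)  # node-c ['node-a', 'node-b', 'node-c']
```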

Retrying individual tasks keeps the cost of a failure small. Only the work of the interrupted attempt is lost, not the whole job, and the rest of the job keeps running while the replacement attempt catches up. One subtlety is that map output is written to the local disk of the node that ran the map task, not to HDFS. If that node fails before every reducer has fetched its output, the framework reruns those already completed map tasks elsewhere.

The framework also watches for stragglers. Suppose a task runs noticeably slower than other tasks of the same job, for example on a node with a failing disk. Hadoop can then launch a duplicate (speculative) attempt of that task on another node. Whichever attempt finishes first is used, and the other is killed. This is the one case in which the same task really does run on more than one node at a time. Speculative execution is enabled by default and controlled by mapreduce.map.speculative and mapreduce.reduce.speculative.
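Straggler detection can be illustrated with a simple rule: compare each running task’s progress rate with the average rate of its siblings, and flag the ones that are far behind. This is a deliberately simplified heuristic. The function find_stragglers and its slow_factor and min_runtime_s thresholds are hypothetical. Hadoop’s own speculator estimates each task’s remaining time instead, and it also caps how many speculative attempts run at once.

```python
def find_stragglers(progress, elapsed_s, slow_factor=0.5, min_runtime_s=60):
    """Flag tasks whose progress rate is well below the average rate.

    progress maps task -> fraction complete (0..1); elapsed_s maps task ->
    seconds running. slow_factor and min_runtime_s are illustrative
    thresholds, not Hadoop defaults.
    """
    rates = {t: progress[t] / elapsed_s[t] for t in progress if elapsed_s[t] > 0}
    if not rates:
        return []
    mean_rate = sum(rates.values()) / len(rates)
    return sorted(
        t for t, rate in rates.items()
        if progress[t] < 1.0                # finished tasks need no backup
        and elapsed_s[t] >= min_runtime_s   # give new tasks time to warm up
        and rate < slow_factor * mean_rate  # far slower than its siblings
    )


progress = {"m1": 0.9, "m2": 0.85, "m3": 0.2, "m4": 1.0}
elapsed = {"m1": 100, "m2": 100, "m3": 100, "m4": 90}
print(find_stragglers(progress, elapsed))  # ['m3']
```

Each flagged task would then get a backup attempt on another node, and the slower of the two attempts is killed once the other completes.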

The benefit of this strategy is twofold. First, individual node failures do not derail data processing jobs, which makes the Hadoop ecosystem more robust and reliable. Second, a single slow or unhealthy machine cannot hold up an entire job. The cost is some duplicated work, since speculative attempts consume cluster resources. Clusters that already run near full utilization sometimes disable speculation for that reason.

In summary, redundant task execution is a critical aspect of Hadoop fault tolerance in distributed computing. Hadoop retries failed attempts on other nodes and backs up slow ones with speculative attempts. This keeps data processing reliable and efficient, making Hadoop a dependable platform for large-scale data processing tasks.

Automatic Job Recovery

A crucial feature of Hadoop fault tolerance in distributed computing is its automatic job recovery mechanism. This functionality is embedded within Hadoop’s resource management framework, YARN (Yet Another Resource Negotiator), which plays a pivotal role in maintaining the reliability of data processing tasks.

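Recovery in YARN works at two levels. Each running job has its own ApplicationMaster (for MapReduce jobs, the MRAppMaster), which tracks the job’s tasks. When a task attempt fails, the ApplicationMaster requests a new container from the ResourceManager so the task can rerun on another node. The ResourceManager, in turn, watches the ApplicationMasters themselves. If one crashes or its node is lost, the ResourceManager launches a new ApplicationMaster attempt in a fresh container, up to the limit set by yarn.resourcemanager.am.max-attempts (two by default). A restarted MapReduce ApplicationMaster can recover the state of tasks that had already completed, so only unfinished work is rerun. Together, these layers keep individual node failures from stopping a job.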
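In YARN, a per-job ApplicationMaster reruns failed tasks, and the ResourceManager restarts the ApplicationMaster itself if it fails. The simulation below focuses on the second level. AM_MAX_ATTEMPTS mirrors the default of yarn.resourcemanager.am.max-attempts. Several parts are hypothetical: run_job, its crash schedule, and the done list, which stands in for the recovery data a restarted MapReduce ApplicationMaster reads back. Per-task retries are left out to keep the focus on the ApplicationMaster.

```python
AM_MAX_ATTEMPTS = 2  # default of yarn.resourcemanager.am.max-attempts


def run_job(tasks, crash_after, run_task):
    """Simulate a job whose ApplicationMaster (AM) may crash mid-run.

    crash_after[i] is how many tasks AM attempt i completes before crashing
    (None means it does not crash). `done` plays the role of the recovery
    state, so a restarted AM skips tasks an earlier attempt finished.
    Returns (number of AM attempts used, completed tasks in order).
    """
    done = []
    for attempt in range(AM_MAX_ATTEMPTS):
        crash_at = crash_after[attempt] if attempt < len(crash_after) else None
        completed_now = 0
        crashed = False
        for task in tasks:
            if task in done:
                continue  # recovered from a previous AM attempt, not rerun
            if crash_at is not None and completed_now == crash_at:
                crashed = True  # AM dies; the ResourceManager launches a new attempt
                break
            run_task(task)
            done.append(task)
            completed_now += 1
        if not crashed:
            return attempt + 1, done
    raise RuntimeError("ApplicationMaster failed on every allowed attempt")


executed = []
attempts, done = run_job(["t1", "t2", "t3", "t4"], crash_after=[2, None],
                         run_task=executed.append)
print(attempts, executed)  # 2 ['t1', 't2', 't3', 't4']
```

The second ApplicationMaster attempt finishes the job without rerunning t1 or t2, which is the point of recovering completed work.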

Automatic job recovery is essential for job reliability. Because failed work is moved to other nodes automatically, a job keeps progressing despite failures. Downtime is minimized, and wasted effort is limited to the tasks that were actually interrupted.

YARN’s dynamic resource management supports this recovery. Work runs in containers that the scheduler can place on any node with spare capacity, so a replacement attempt does not need a dedicated standby machine. It starts wherever resources are free, which keeps recovery delays short.

The benefits of automatic job recovery are manifold. First, it enhances the fault tolerance of the Hadoop ecosystem by ensuring that data processing jobs can withstand and recover from node failures. Second, it ensures that tasks are completed successfully, maintaining the integrity and reliability of data processing workflows. Lastly, this mechanism reduces the need for manual intervention, allowing system administrators to focus on higher-level management tasks.

In summary, automatic job recovery is a vital component of Hadoop fault tolerance in distributed computing. YARN monitors both nodes and ApplicationMasters and allocates resources dynamically. As a result, Hadoop completes data processing jobs reliably even when nodes fail, making it a dependable platform for large-scale data analytics.

Failure Detection and Notification

Failure detection and notification are essential components of Hadoop fault tolerance in distributed computing. Hadoop detects failed nodes automatically and exposes the health of the cluster, so that administrators can be alerted and intervene in time.

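Detection is built into the HDFS and YARN master daemons rather than provided by a separate tool. Every DataNode sends a heartbeat to the NameNode, every three seconds by default. If the heartbeats stop for about ten and a half minutes (with default settings), the NameNode declares the DataNode dead and starts re-replicating its blocks. Likewise, NodeManagers send heartbeats to the ResourceManager. After ten minutes without contact by default (yarn.nm.liveness-monitor.expiry-interval-ms), the ResourceManager marks the node as lost and informs the affected ApplicationMasters so they can reschedule its work. Both daemons publish their state through web UIs and metrics, for example over JMX, which is where failures and performance degradation become visible.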
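The NameNode’s dead-node timeout follows from two settings. One is the heartbeat interval (dfs.heartbeat.interval, 3 seconds by default). The other is the recheck interval (dfs.namenode.heartbeat.recheck-interval, 5 minutes by default). A DataNode is considered dead after 2 × recheck + 10 × heartbeat without a heartbeat, which comes to 630 seconds with the defaults. The sketch computes that value and applies it to a hypothetical table of last-heartbeat times. The function names are illustrative.

```python
HEARTBEAT_INTERVAL_S = 3             # dfs.heartbeat.interval default (seconds)
RECHECK_INTERVAL_MS = 5 * 60 * 1000  # dfs.namenode.heartbeat.recheck-interval default (ms)


def dead_node_timeout_s(heartbeat_s=HEARTBEAT_INTERVAL_S, recheck_ms=RECHECK_INTERVAL_MS):
    """Seconds without a heartbeat after which the NameNode marks a DataNode dead."""
    return 2 * recheck_ms / 1000 + 10 * heartbeat_s


def find_dead_nodes(last_heartbeat_s, now_s):
    """last_heartbeat_s maps a DataNode name to the time (s) of its last heartbeat."""
    timeout = dead_node_timeout_s()
    return sorted(node for node, seen in last_heartbeat_s.items() if now_s - seen > timeout)


print(dead_node_timeout_s())  # 630.0
print(find_dead_nodes({"dn1": 995, "dn2": 300, "dn3": 400}, now_s=1000))  # ['dn2']
```

Here dn3 has been silent for 600 seconds, which is still inside the 630-second window, so only dn2 is reported.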

Administrators can build on this by configuring alerts and notifications. Hadoop itself does not page or e-mail anyone. Alerting is usually set up in a cluster management tool or in a general-purpose monitoring system that collects Hadoop’s metrics. With such alerts in place, a failed node or a metric that crosses a threshold triggers a notification to the relevant personnel, so they can act before a small problem turns into downtime.

Moreover, the ability to receive timely alerts is not just about fixing immediate issues; it also allows for better resource planning and preventive maintenance. By understanding the nature and frequency of failures, administrators can anticipate potential problems and implement strategies to mitigate them before they escalate.

To make notifications effective, configure them carefully. Administrators should base alerts on criteria relevant to their operations. Examples include dead or unhealthy nodes, blocks that are missing, corrupt, or under-replicated, and storage or memory utilization. Tuning these criteria keeps alerts meaningful and actionable rather than noisy.
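Threshold-based alerting of this kind comes down to comparing metric snapshots with rules. The metric names below are modeled on NameNode metrics such as MissingBlocks and UnderReplicatedBlocks. The snapshot format, the rules, and the thresholds are hypothetical examples. In practice, a monitoring system would collect the values, for instance from the NameNode’s JMX endpoint.

```python
# Hypothetical alert rules: (metric, breach test, severity, message).
RULES = [
    ("NumDeadDataNodes", lambda v: v > 0, "critical", "DataNode(s) declared dead"),
    ("MissingBlocks", lambda v: v > 0, "critical", "blocks with no readable replica"),
    ("UnderReplicatedBlocks", lambda v: v > 100, "warning", "re-replication backlog is growing"),
    ("CapacityUsedPercent", lambda v: v > 85.0, "warning", "HDFS capacity running low"),
]


def evaluate(metrics, rules=RULES):
    """Return (severity, metric, value, message) for every rule the snapshot breaches.
    Metrics missing from the snapshot are skipped rather than treated as failures."""
    alerts = []
    for name, breached, severity, message in rules:
        value = metrics.get(name)
        if value is not None and breached(value):
            alerts.append((severity, name, value, message))
    return alerts


snapshot = {"NumDeadDataNodes": 1, "MissingBlocks": 0,
            "UnderReplicatedBlocks": 412, "CapacityUsedPercent": 71.3}
for alert in evaluate(snapshot):
    print(alert)
```

Routing the returned alerts to e-mail, chat, or a pager is left to whatever notification system the cluster already uses.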

Ultimately, the integration of failure detection and notification mechanisms within Hadoop’s ecosystem fortifies its fault tolerance. By enabling continuous monitoring and timely alerts, Hadoop ensures that potential issues are promptly addressed, maintaining the stability and performance of the distributed computing environment. This proactive monitoring framework underscores Hadoop’s reliability and robustness in managing large-scale data processing tasks.

Data Integrity Checks

Hadoop performs data integrity checks at several stages to make sure that data is not silently corrupted by faulty disks, memory, or network transfers. The primary mechanism is checksum validation. Rather than computing one checksum per block, HDFS computes a checksum for every 512 bytes of data (dfs.bytes-per-checksum), using CRC32C by default. It stores these checksums in a metadata file next to each block replica. The checksums are verified when data is written and when it is read. Each DataNode also runs a background block scanner that periodically re-verifies stored replicas; with the default dfs.datanode.scan.period.hours, each block is checked about once every three weeks.
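Per-chunk checksumming can be sketched with Python’s zlib. Note the substitution: HDFS uses CRC32C by default, while zlib.crc32 computes the standard CRC-32. The two detect errors the same way but produce different values. The 512-byte chunk size matches the dfs.bytes-per-checksum default. The helper names are hypothetical.

```python
import zlib

BYTES_PER_CHECKSUM = 512  # default of dfs.bytes-per-checksum


def chunk_checksums(data, chunk=BYTES_PER_CHECKSUM):
    """One checksum per chunk, as HDFS stores them alongside a block replica.
    zlib.crc32 (CRC-32) stands in for HDFS's default CRC32C."""
    return [zlib.crc32(data[i:i + chunk]) for i in range(0, len(data), chunk)]


def find_corrupt_chunks(data, expected, chunk=BYTES_PER_CHECKSUM):
    """Indexes of chunks whose recomputed checksum differs from the stored one."""
    actual = chunk_checksums(data, chunk)
    return [i for i, (got, want) in enumerate(zip(actual, expected)) if got != want]


block = bytes(range(256)) * 5  # 1,280 bytes -> chunks of 512, 512 and 256 bytes
stored = chunk_checksums(block)

corrupted = bytearray(block)
corrupted[700] ^= 0xFF  # flip every bit of one byte inside the second chunk
print(len(stored), find_corrupt_chunks(bytes(corrupted), stored))  # 3 [1]
```

Per-chunk checksums localize the damage: only the chunk containing the changed byte fails verification.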

When a client reads a block, it recomputes the checksums and compares them with the stored values. On a mismatch, the client reads the block from another replica instead and reports the corrupt replica to the NameNode. The NameNode then re-replicates the block from a healthy copy and deletes the bad one. Checksums only detect corruption; the repair relies on HDFS’s data replication.
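The detect-then-repair sequence on a read can be sketched as follows. The names verified_read and report_bad are hypothetical. The report_bad hook stands in for the client reporting a corrupt replica to the NameNode. For brevity, a single CRC-32 over the whole block replaces HDFS’s per-chunk CRC32C.

```python
import zlib


def checksum(data):
    return zlib.crc32(data)  # stand-in for HDFS's per-chunk CRC32C checksums


def verified_read(block_id, replicas, expected, report_bad):
    """Return data from the first replica whose checksum matches `expected`.

    replicas is a list of (node, data) pairs. report_bad(block_id, node) is a
    hypothetical hook standing in for the client reporting a corrupt replica
    to the NameNode, which then re-replicates from a good copy.
    """
    for node, data in replicas:
        if checksum(data) == expected:
            return data
        report_bad(block_id, node)  # corrupt copy: report it, then try the next one
    raise OSError(f"every replica of {block_id} failed checksum verification")


good = b"block payload"
reported = []
data = verified_read(
    "blk_42",
    [("dn1", b"block pay1oad"), ("dn2", good)],  # dn1 holds a corrupted copy
    expected=checksum(good),
    report_bad=lambda blk, node: reported.append((blk, node)),
)
print(data, reported)  # b'block payload' [('blk_42', 'dn1')]
```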

Processing frameworks that run on Hadoop add protection of their own. MapReduce, for example, checksums the intermediate map output it writes to local disk and verifies it when reducers fetch that output. Apache Spark can recompute lost partitions of a dataset from their lineage. Combined with HDFS checksums, these measures help keep data correct from storage through the end of the processing pipeline.

Ensuring Reliable Distributed Computing with Hadoop

Hadoop employs a range of fault tolerance mechanisms to ensure reliable and fault-tolerant distributed computing. These include data replication in HDFS, redundant task execution, automatic job recovery, failure detection and notification, and data integrity checks. Each of these mechanisms contributes to mitigating the impact of hardware failures, network issues, and other disruptions.

Data replication in HDFS stores multiple copies of every data block on different nodes, providing redundancy in case of node failures. Redundant task execution in the MapReduce framework retries failed tasks on different nodes and backs up slow ones. Automatic job recovery, managed by YARN, restarts failed ApplicationMasters, which reschedule failed tasks on alternative nodes so jobs complete. Failure detection and notification mechanisms alert administrators to potential issues, enabling prompt intervention. Data integrity checks catch corrupted data before it is used.

By integrating these comprehensive fault tolerance mechanisms, Hadoop safeguards data integrity and reliability. This enables organizations to process large volumes of data with confidence, ensuring that their data remains accurate and consistent. Consequently, organizations can derive valuable insights from their data, leveraging Hadoop’s robust and reliable framework for their big data processing needs.

Hadoop Fault Tolerance in Distributed Computing: 5 Questions

How does HDFS replication ensure fault tolerance in Hadoop?

- HDFS replication ensures fault tolerance by maintaining multiple copies of each data block across different nodes in the cluster. This minimizes the risk of data loss in case of node failures, as the data is still accessible from other nodes.

What is the significance of redundant task execution in Hadoop?

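- Redundant task execution in Hadoop’s MapReduce framework lets a task run as more than one attempt. If an attempt fails, for example because its node crashes, Hadoop automatically retries the task on another available node. If an attempt is unusually slow, speculative execution can launch a backup attempt elsewhere and keep whichever finishes first. This ensures job completion and minimizes the impact of failures on data processing.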

How does YARN contribute to fault tolerance in Hadoop?

- YARN contributes to fault tolerance at two levels. The ResourceManager restarts a job’s ApplicationMaster if it fails, and the ApplicationMaster reruns failed tasks on alternative nodes. This automatic job recovery mechanism helps maintain job reliability and ensures that tasks are completed successfully despite node failures.

What role do data integrity checks play in Hadoop?

- Data integrity checks in Hadoop ensure that data remains consistent and accurate throughout the processing pipeline. Checksums detect corruption caused by hardware faults or faulty transfers. HDFS repairs a corrupt block by reading it from a healthy replica and re-replicating it, which maintains the integrity of data blocks stored in HDFS.

How can failure detection and notification improve Hadoop’s reliability?

- The NameNode and ResourceManager detect failed nodes through missed heartbeats and expose health metrics for the cluster. Alerting built on these metrics, typically in a cluster management or monitoring tool, sends administrators timely updates about failures or performance degradation. They can then take appropriate action, which enhances the overall reliability of the Hadoop environment.

By understanding and implementing these fault tolerance mechanisms, organizations can ensure reliable and robust distributed computing with Hadoop, maintaining data integrity and enabling efficient processing of large volumes of data.